Three years ago a national credit union hired me to collect some "hard-to-reach public data." Specifically, they wanted to mass-gather Peoria and surrounding county’s public records on commercial real estate to learn details about potential customers that would help them sell loans. Here is the painful true story of getting that data (which I mapped here).

Note: Given the terms of my agreement with this client, I have always been at liberty to share the following.

Recognizing an opportunity

Let's set the stage. Bankers know that there are really just three times a business switches banks:

- When their bank is acquired by another bank.

- When their account manager dies.

- When their loans have matured.

In scenarios 1 and 2, a business is likely to switch to another bank because they no longer get the level of service they're accustomed to. Imagine an older business owner not being able to visit their nearest branch because it was closed to "boost synergies." They're not happy. For scenario 3, it's pertinent to know that commercial loans, unlike residential mortgages, often mature before the entire balance is paid off. This is a contractually built-in opportunity for banks to force a borrower to renew the loan with another bank if the account hasn't been worth their while. At the same time, it's also the best opportunity a borrower has to shop around for better terms.

To the regular person this doesn't mean that much but to banks these critical moments can mean losing very valuable clients. On the flip side, if you are a lending institution able to identify a business, or a portfolio of businesses, going through these critical moments at a rival bank, then you could leverage that information to win a lot of clients. Or so the theory goes.

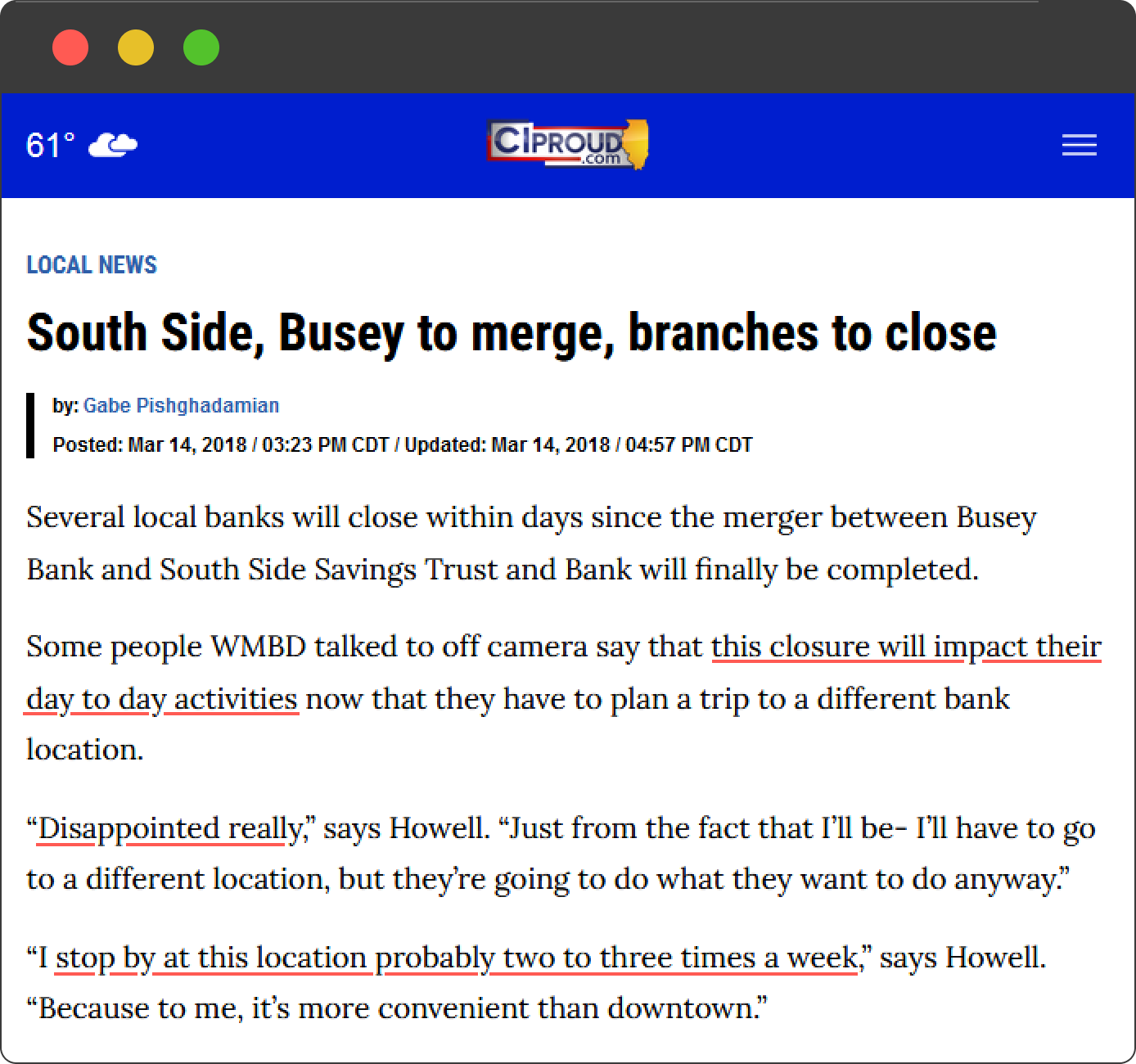

The opportunity to test it had arrived. At the time, Busey Bank was in the process of acquiring Peoria's South Side Savings & Trust Bank. Founded in 1922, South Side Bank had more than $662 million in bank assets and nearly $585 million in trust assets. The merger was scheduled to complete 10 months in the future. My client needed a detailed list of South Side's customers with their net worth, upcoming loan maturity dates, and terms. If we acted fast, my client would have the list and several months to strategically build relationships with businesses primed to switch to them. Ready... set...

Let the nightmare begin

In the simplest case, the way to find a single maturity date in a single loan filing within a county is to:

- Visit the website of the office of the recorder. Here's Peoria's.

- Filter your search to match on mortgages.

- Open its PDF and

CTRL+Fsearch for the maturity date.

Not too bad, right? Nice. Now repeat that at least 10,000 times to get all of them.

The moment you want to do anything en masse, literally hundreds of issues spring up from seemingly nowhere. I'll spare you the details but here were the big ones.

Don't DDoS where you eat.

When you write a computer program to scrape any public data set, you have to space apart automated requests so that the target server is not overloaded. This means that you can't just spin up 10,000 virtual Chrome browsers, have them all visit the same website at the same time, and be done collecting in 10 seconds. Doing that would be a Distributed Denial of Service (DDoS) attack which is a really bad thing. That means that, despite the expectation for computers to deliver instant results, scraping results come anything but instant. Depending on the amount of data you want to collect, and the capacity of the website handling your requests, it can take days or weeks to responsibly collect the information you need. For this project, programming the scrapers, tuning, and running them took about 32 hours.

The Recorder's website doesn't indicate whether a property is commercial or residential.

Run a mortgage search on Peoria's Office of the Recorder website and only about 1 out of 10 results will be for a commercial loan. Sadly, the way to identify the right ones isn't easily automated. Fortunately, Peoria County's Office of Assessments maintains the Property Tax database which does label parcels as either commercial or not. So it was easier to write a Nightmare.js scraper to download all 80,000 tax records, filter them down to commercial ones, then use that list to seed automated searches against the Recorder's website.

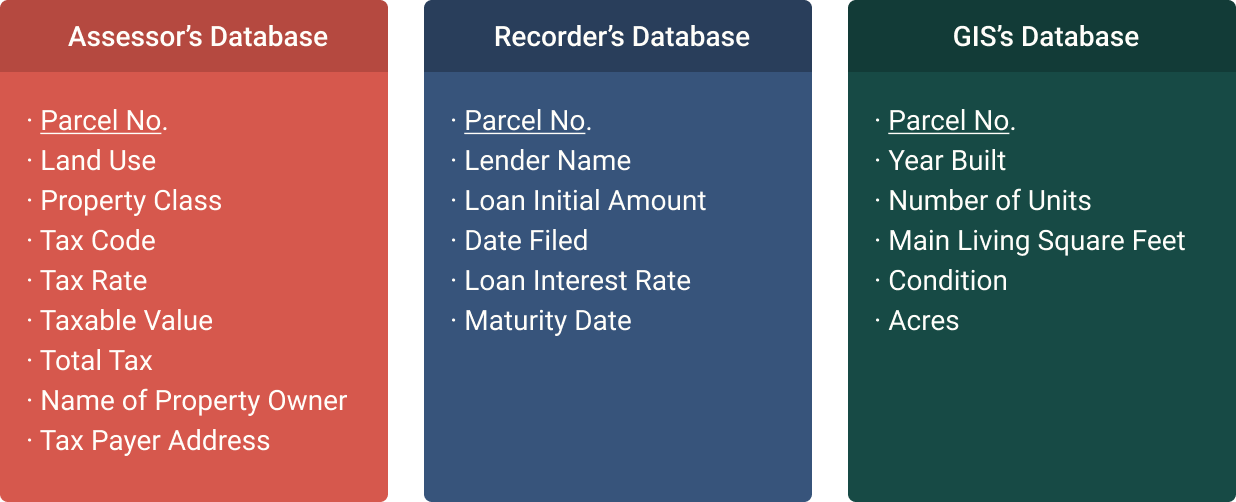

Enrich the dataset... more.

Even though I had lender and borrower information from the Recorder's database merged with tax fields from the Assessor's database, my client also needed parcel details like the year its structures were built, square footage, and condition. The only other source to provide these was Peoria County GIS. So after a little inspection of their Javascript API, I was able to write a Python script to extract this data too. All told, the deliverable aggregated about 20 data points across these three databases joined by Parcel Number, as shown below.

3. Open the PDFs and search for maturity dates

Search for something that cannot be searched

This is when things got difficult. Each of the 10,000 contracts extracted from the Office of the Recorder were about 13 pages long. The mission: find four snippets of text in each contract. That means we needed to find about 40,000 data points buried across 125,000 contract pages.

For perspective, if you lay those many pages on the floor - side to side as if to read them left to right in one go - you would have to walk 17 miles to get to the end.

If every contract had the same format there would be a fairly straightforward solution to this: use regular expression pattern matching to extract the target data. But almost none of the contracts followed the same format, even among those from the same lender. So instead I would have to develop a more robust parser to automatically extract text and deduce whether it represented a loan's maturity date, tax rate, initial amount, or lender name. This was doable but there was just one more problem - most contracts were not machine-readable. They were just unsearchable scanned-in copies. In effect I needed to come up with a way to CTRL+F 125,000 pictures.

The process of converting a scanned document into one with searchable words is called Optical Character Recognition (OCR) and it’s something most scanners nowadays can do automatically. But OCR is computationally intensive and it would have to be quite accurate to extract the data I needed. Good OCR is also really expensive so I figured out a way to make the cheapest version of the world’s best OCR software convert 125,000 loan documents. It took 6 days to process on a laptop.

Now that I had machine-readable contracts, I developed a parser to extract the data of interest. But I quickly ran into a new problem - a lot of the text wasn’t converted correctly. As it turns out, even the world’s best OCR has a failure rate of about 5%, so text that should’ve converted to say a 4 were actually converted into an A, or an 8 converted into a B. All of this makes it darn near impossible to accurately extract 40,000 names and numbers from 10,000 loan contracts.

Lead a crowd

So I began to think of a new solution. If I couldn’t extract the data I needed with a machine, then maybe I could do it with humans – lots of humans. I realized that I would have to manage a crowd. Crowdsourcing is pretty amazing. It's how Stanford University uses idle personal computers owned by volunteers from all over the world to cure diseases. It's how Google improves their language translation services and maps. What you never hear about crowdsourcing is how much of a pain it is to manage. Managing hundreds of remote workers comes with three big hurdles:

- Hundreds of workers will make honest mistakes all the time.

- Workers will sometimes cheat or lie on a task to get through it faster to raise their earnings per hour.

- You have to stay within budget despite the above.

So the question one has to answer when crowdsourcing transcription is, “How do I obtain a high quality product produced by an untrustworthy workforce?” That's a hard question to answer and one I wrestled with for a while. What I settled on was to design a system of production such that workers didn't need to be trusted. In effect, I had to build the ReCAPTCHA system.

Most people aren't aware that they're a worker in a massive, long running crowdsourcing project called ReCAPTCHA. Sure, people are all too familiar with that annoying website prompt asking to prove they're human. The part most folks don't know is that when you type in those psychedelic looking words, you've just freely transcribed hard-to-read text from a real book or street sign. Your Internet browsing was hijacked to turn you into a human OCR. The developers of this system realized that if they distributed the same hard-to-read prompt to enough people, and enough of them transcribed the prompt the same way, then the actual text in the prompt must be what most of the humans said it was. Amazingly, the developers can do this all without knowing what the prompt says or even trusting the humans involved. Consensus alone produces the right answer.

So to solve my data transcription problem, I built a mini ReCAPTCHA system and deployed it on Amazon Mechanical Turk. When said and done I managed over 200 remote workers over the course of a week to accurately transcribe over 8,000 data fields from Peoria's commercial loan contracts. To wrap up the project I was able to extract all remaining data using programmatic means.

The final product

For all the work that went into curating this dataset, you would think it would be stored in a MySQL database somewhere in the cloud. This time, reality asked for something easy - an Excel file because that worked perfectly for the client. It looked a lot like this:

| Parcel No | Land Use | Property Class | Tax Code | Tax Rate | Taxable Value | Total Tax | Assessor's URL | Owner1 Name | Owner1 Address | Owner2 Name | Owner2 Address | Owner3 Name | Owner3 Address | Owner4 Name | Owner4 Address | Property Address | Lender | Principal | Filed Date | Interest Rate | Maturity Date | Year Built | Condition | Acres | Main Living Sqft | Value Per Sqft | Township | Recorder's URL | GIS URL | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1808102037 | Residential Non Owner Occupied | 0040 - Improved Lots | 1 | 9.55927 | 31590 | 3145.78 | http://propertytax.peoriacounty.org/parcel/view/1808102037/2015 | MOSS BRADLEY REVOLVING FUND | C/O MARJORIE KLISE 1807 MOSS AVE PEORIA, IL, 61606 | 1721 W BARKER AVE PEORIA, IL 61606 | BUSEY BANK | 66781.81 | 2013-10-03 14:35:00 | 1920.0 | Fair | 0.222 | 1416.0 | 10.24 | City of Peoria | https://recorder.peoriacounty.org/recorder/eagleweb/viewDoc.jsp?node=DOCCGR-1218819 | http://gis.peoriacounty.org/peoriagis/?pin=1808102037 | ||||||||

| 1 | 831300003 | Farm Non Owner Occupied | 0011 - Homesite-Dwelling | 505 | 6.96702 | 13394 | 933.16 | http://propertytax.peoriacounty.org/parcel/view/0831300003/2013 | WEAVER ENTERPRISES | 10216 W CIVIL DEFENSE RD BRIMFIELD, IL, 615179444 | HEARTLAND BANK | 1550000.0 | 2011-05-31 09:44:00 | 0.0 | 160.005 | 0.0 | Radnor | https://recorder.peoriacounty.org/recorder/eagleweb/viewDoc.jsp?node=DOCCGR-1048196 | http://gis.peoriacounty.org/peoriagis/?pin=0831300003 | |||||||||||

| 2 | 806400009 | Farm Owner Occupied | 0011 - Homesite-Dwelling | 500 | 6.66334 | 54498 | 3631.4 | http://propertytax.peoriacounty.org/parcel/view/0806400009/2012 | JAMES H WITHERS | 9121 W PARKS SCHOOL RD PRINCEVILLE, IL, 61559 | MARY P WITHERS | 9121 W PARKS SCHOOL RD PRINCEVILLE, IL, 61559 | 9121 W PARKS SCHOOL RD PRINCEVILLE, IL 61559 | BETTER BANKS | 100000.0 | 2010-08-31 12:05:00 | 1971.0 | Good | 10.573 | 1000.0 | 26.35 | Radnor | https://recorder.peoriacounty.org/recorder/eagleweb/viewDoc.jsp?node=DOCCGR-995625 | http://gis.peoriacounty.org/peoriagis/?pin=0806400009 | ||||||

| 3 | 1312351004 | Commercial | 0060 - Improved Commercial | 1 | 8.95549 | 300540 | 26914.84 | http://propertytax.peoriacounty.org/parcel/view/1312351004/2012 | METHODIST SERVICES INC | 221 NE GLEN OAK AVE PEORIA, IL, 61636 | 6831 N PEAR TREE LN PEORIA, IL 61615 | 742500.0 | 2010-02-26 11:37:00 | 0.0 | 2.003 | 0.0 | City of Peoria | https://recorder.peoriacounty.org/recorder/eagleweb/viewDoc.jsp?node=DOCCGR-959997 | http://gis.peoriacounty.org/peoriagis/?pin=1312351004 | |||||||||||

| 4 | 1434130006 | Residential Non Owner Occupied | 0030 - Vac Lots-Lands | 1 | 0.0 | 0 | 0.0 | http://propertytax.peoriacounty.org/parcel/view/1434130006/2016 | MAXHOME LLC | C/O GARY NEUMANN, MANAGER 19760 WESLEY ST DOWNS, IL, 61736 | E MAYWOOD AVE PEORIA, IL 61603 | CITIZENS EQUITY FIRST CREDIT UNION | 604800.0 | 2016-04-18 13:44:08 | 2026-05-01 00:00:00 | City of Peoria | https://recorder.peoriacounty.org/recorder/eagleweb/viewDoc.jsp?node=DOC124S221 | http://gis.peoriacounty.org/peoriagis/?pin=1434130006 | ||||||||||||

| 5 | 518101015 | Farm Non Owner Occupied | 0021 - Farmland | 100 | 6.82214 | 3068 | 209.3 | http://propertytax.peoriacounty.org/parcel/view/0518101015/2009 | STEVEN T MILLER | 3211 E ROME WEST RD CHILLICOTHE, IL, 61523 | ROBERTA J MILLER | 3211 E ROME WEST RD CHILLICOTHE, IL, 61523 | E HART LN CHILLICOTHE, IL 61523 | STATE BANK OF SPEER | 99000.0 | 2007-11-12 13:02:00 | 7.25 | 0.0 | 19.794 | 0.0 | Chillicothe | https://recorder.peoriacounty.org/recorder/eagleweb/viewDoc.jsp?node=DOCCGR-307663 | http://gis.peoriacounty.org/peoriagis/?pin=0518101015 | |||||||

| 6 | 1422326003 | Residential Non Owner Occupied | 0040 - Improved Lots | 207 | 9.099 | 17420 | 1585.06 | http://propertytax.peoriacounty.org/parcel/view/1422326003/2010 | 1004 E GLEN AVE PEORIA HEIGHTS, IL 61616 | HEIGHTS BANK | 20000.0 | 2008-07-11 16:23:00 | 2013-07-11 00:00:00 | 1920.0 | Fair | 0.172 | 895.0 | 15.22 | Richwoods | https://recorder.peoriacounty.org/recorder/eagleweb/viewDoc.jsp?node=DOCCGR-333818 | http://gis.peoriacounty.org/peoriagis/?pin=1422326003 | |||||||||

| 7 | 614300020 | Farm Owner Occupied | 0011 - Homesite-Dwelling | 851 | 7.0516 | 42505 | 2997.28 | http://propertytax.peoriacounty.org/parcel/view/0614300020/2009 | LYNN OPPE | 12806 N WHITTAKER RD BRIMFIELD, IL, 615179767 | DENA M LEWIS | 12806 N WHITTAKER RD BRIMFIELD, IL, 61517 | 12806 N WHITTAKER RD BRIMFIELD, IL 61517 | CEFCU | 50000.0 | 2007-07-25 13:30:00 | 2001.0 | Fair | 6.445 | 1512.0 | 26.42 | Brimfield | https://recorder.peoriacounty.org/recorder/eagleweb/viewDoc.jsp?node=DOCCGR-297452 | http://gis.peoriacounty.org/peoriagis/?pin=0614300020 | ||||||

| 8 | 1717300015 | Farm Owner Occupied | 0011 - Homesite-Dwelling | 369 | 8.18159 | 33781 | 2763.82 | http://propertytax.peoriacounty.org/parcel/view/1717300015/2016 | 8709 W JOHNSON FARM RD PEORIA, IL 61607 | MORTON COMMUNITY BANK | 89930.5 | 2016-05-13 15:29:15 | 1947.0 | Average | 7.318 | 1469.0 | 23.88 | Limestone | https://recorder.peoriacounty.org/recorder/eagleweb/viewDoc.jsp?node=DOC128S157 | http://gis.peoriacounty.org/peoriagis/?pin=1717300015 | ||||||||||

| 9 | 1605100010 | Farm Owner Occupied | 0011 - Homesite-Dwelling | 703 | 7.82556 | 55111 | 4312.74 | http://propertytax.peoriacounty.org/parcel/view/1605100010/2013 | JOEL P WOERNER | 16122 W SOMMERFIELD RD TRIVOLI, IL, 61569 | MELISSA L NELSON-WOERNER | 16122 W SOMMERFIELD RD TRIVOLI, IL, 61569 | 16122 W SOMMERFIELD RD TRIVOLI, IL 61569 | CEFCU | 146950.0 | 2011-06-29 11:19:00 | 2026-07-01 00:00:00 | 2003.0 | Good | 9.621 | 1568.0 | 35.52 | Logan | https://recorder.peoriacounty.org/recorder/eagleweb/viewDoc.jsp?node=DOCCGR-1053800 | http://gis.peoriacounty.org/peoriagis/?pin=1605100010 |

At this point my job was done. The client used this data to identify and connect with high net worth businesses - many of which banked at South Side Savings & Trust.

Fast forward 10 months

As predicted, customers involved in the Busey-South Side acquisition would get hot and bothered. What I hadn't seen coming (but probably should have) was the number of Busey specific customers that would get affected by the merger - at least four Busey branches closed. In all my talks with bankers since this project, and I've spoken with many, I don't recall a single one ever talking about the business opportunity of pursing the irate customers of an acquiring bank. Instead, when the topic comes up, the focus is typically on pursuing leads from the institution to be acquired - as in how this entire project had been predicated to me. That's really curious.

On the other hand, now I see that it shouldn't have been a surprise that Busey customers were going to get a little "forgotten." Since banks know that acquisitions are a vulnerable time, it may be that they spend additional resources ensuring they lose as few accounts possible from their new branches. But in the process of doing that, they lose focus on the needs of the customers they already have. And that's always a recipe for churn.

How did the client do?

The database I prepared was used to identify high-value businesses banking with South Side Bank and Trust. I was told my client started things off with targeted mailers, followed by phone calls, which probably turned into lunch invitations and so on. Last I checked in it sounded like our project had effectively paid for itself but not too much beyond. Honestly, it's kind of a flat ending for a project that personally had so many twists and turns. Wish I could have said "my leads list netted them 15 million dollars" but I honestly can't.

On the other hand, I know bankers who have pursued specific clients for a long time. One gentleman tells me he has a business he's been trying to sign for 15 years. Another told me that there was a business in town that his third predecessor (think grandpa banker) had begun to woo over and it wasn't until it got to him - 18 years later, that they finally got the account. So maybe this story isn't finished yet. Maybe there's a grandson banker 20 years down the road who ends up catching a "big fish" because of an Excel sheet I made in 2017. Maybe.

Finally, the map.

From initial consultation to having all this data took about 2 months. Making the map took about 4 hours. Such is the tale of your average data project:

85% of the time is spent wrangling data versus only about 15% for analysis.

But analyze I did. To make the map I merged my dataset with the All Tax Districts shapefile available on Peoria County's open data portal. This was uploaded to Mapbox where each parcel could be styled and 3-dimensionally extruded based on its mortgage principal. I decided to add interactivity using a scrolling panel that pans and zooms to top parcels of interest.

When scrolling through these examples, we see something odd - There's a Hardee's that cost $2 billion? I address this question and others in the full write up at I Mapped $63 Billion of Commercial Loans in Peoria County.

Hire me

If you liked this article, feel like you need to politely challenge it, or would like to hire me to run a data project, then let me know what you're thinking in the forum below (which has a private messaging option, too, if that's more your style).